One of many great things about working at Alter Solutions is, through its extensive training program, the opportunity to learn new skills and technologies, such as, the fascinating field of Machine Learning (ML).

The proliferation of neural networks in recent years, allied to the slowing of Moore’s Law, led the emergence of hardware accelerators, such as, GPUs and, more recently, Tensor Processing Units (TPUs).

A TPU is a Google proprietary AI accelerator application-specific integrated circuit (ASIC) specialized for neural networks workloads, contrary to general purpose processors like CPUs and GPUs.

TPU was launched in 2015 and, in 2017, it was generally made available as a Google Cloud Platform (GCP) service, Cloud TPU.

At the time of writing, TPUs are the fastest and most efficient ML accelerators, especially for large models, with millions or even billions of parameters. Currently, many of Google products are powered by TPUs, including Google Photos, Google Translate, Gmail, and many others.

THE NEED FOR SPEED

The major mathematical operations required by neural networks workloads are essentially linear algebra operations e.g., matrix multiplications. Matrix operations are the most computationally expensive operations performed when training and inferring neural network models. For this reason, the main task of a TPU is matrix processing, which translates to a combination of multiplications and aggregations operations.

Figure 1 depicts the high-level architecture of a second generation TPU chip. At the heart of a TPU chip lies the Matrix Multiplication Unit (MXU). The MXU is capable of hundreds of thousands of matrix operations in each clock cycle. This is a significant increase compared to CPUs, only capable of performing up to tens of operations and, in case of a GPU, tens of thousands of operations per cycle capacity.

Figure 1 - TPU V2 high level architecture. Each processor has two cores with on-chip 8GB of High Bandwidth Memory (HBM) and one MXU.

Each MXU features 128 x128 Arithmetic Logical Units (ALU) connected to each other to perform the multiply-accumulate operations. This 128 by 128 physical matrix architecture is known as a systolic array. The systolic array mechanism is what allows MXUs to perform matrix multiplications at great speeds, achieving time complexity of O(n), instead of polynomial complexity when using sequential processors.

The on-chip High Bandwidth Memory (HBM) is used to store the weight (variable) tensors, as well as intermediate result tensors needed for gradient computations. Once the TPU loads the parameters from HBM to the MXUs, the MXUs compute all the matrix multiplications over the data without needing to access memory. This allows for a much higher computation throughput when compared with CPUs and GPUs, which need to access memory to read and write intermediate results at every calculation.

The vector unit is used for general computation, such as, activations, softmax, among others. The scalar unit is used for control flow, calculating memory address, and other maintenance operations.

Given the TPU architecture characteristics, to efficiently train models on the TPU, larger batch sizes should be used. The ideal batch size is 128 per TPU core, since this eliminates inefficiencies related to memory transfer and padding. And the minimum batch size of any model should 8 per TPU core.

Currently there are four generations of TPUs. The latest generation, TPU v4, can have up to eight cores, with each core having a maximum of four MXUs and 32GB HBM.

TRAINING ON CLOUD TPU

Cloud TPU is a GCP service that provides TPUs as scalable resources and it supports Tensorflow (TF), JAX and PyTorch frameworks. Access to TPUs by these frameworks is abstracted by a shared C library called libtpu, present on every TPU VM. This library compiles and runs programs in those frameworks and provides a TPU driver used by the runtime for low-level access to the TPU.

Cloud TPU provides access to single TPU boards and TPU pods or pod slices configurations. A TPU Pod is a collection of TPU devices connected by dedicated high-speed network interfaces and each TPU chip communicates directly with other chips on the same TPU device. The TPU software automatically handles distributing data to each TPU core in a Pod or slice. A TPU Pod can have up to 2,048 TPU cores, allowing large scale distributed training by distributing the processing load across multiple TPUs. Note that, single board TPUs are not part of a TPU Pod configuration and do not occupy a portion of a TPU Pod. Currently, Cloud TPU pods are only available by request.

Cloud TPU provides two architecture offerings:

- Cloud TPU Node: a compute engine that hosts TPU accelerators, only remotely accessible via gRPC, without providing user access;

- Cloud TPU VM: compute engine accessible to users e.g., via SSH, where it’s possible to build and run models and code directly in the TPU host machine.

Due to greater usability and, by allowing to take direct advantage of the TPU hardware without the need of an intermediate network connection, Cloud TPU VMs are recommended over Cloud TPU Nodes. However, there are cases where Cloud TPU nodes might be a better choice, e.g., if the entire ML workflow (e.g., pre-processing, training, evaluation, etc) runs in the same machine, it might be financially cheaper to limit the usage of a TPU host machine to the training step; for this, we can use the Cloud TPU API client library to programmatically:

- Find an available TPU node i.e., a node with status STOPPED;

- Start the available node before starting training step;

- And finally, stop the node once training step completes.

Keep in mind that training models on a Cloud TPU Node requires the model dataset, and other input files to be accessible from a Google Cloud Storage (GCS) bucket, ideally located in the same region as the TPU Node VM, to reduce network latency and costs. The model directory must also be accessible on a GCS bucket to save model training checkpoints. Otherwise, we get the error InvalidArgumentError: Unimplemented: File system scheme '[local]' not implemented

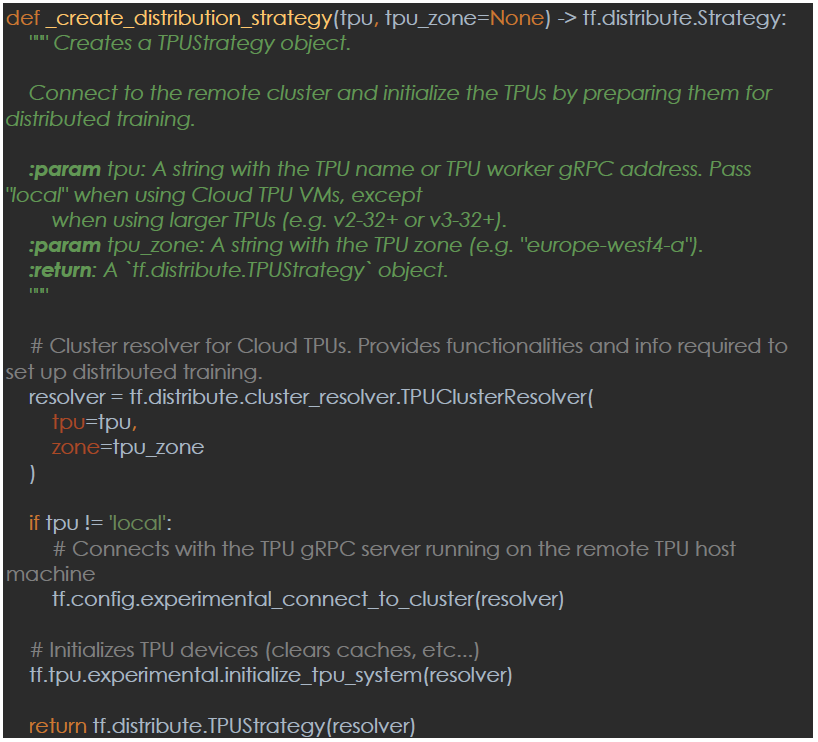

The code required to train TF models with TPUs is minimal and there are just few slight differences between Cloud TPU Nodes or Cloud TPU VMs training, as indicated in the function below:

The distributed strategy object returned by the above function provides an abstraction for distributing training across multiple TPUs. With this strategy, the model is replicated across each TPU device or worker. All of the model's variables are copied to each TPU processor. Before each training step, input data is sharded across different devices or replicas. The execution of each training step consists of the following:

- Each replica runs a forward and backward pass over its sources;

- Combine all replicas computed backward pass gradients using a reduction (e.g., sum/mean);

- Synchronize replicas variables by broadcasting the aggregated gradients to all the replicas.

This type of distributed training is known as all-reduce synchronous training.

To use a distribution strategy, the model creation code must be placed inside a strategy scope block, just below:

All the TF components inside the scope become strategy-aware. In practice, this means that model variable creation inside the scope is intercepted by the strategy. The TF components that create variables, such as, optimizers, metrics, summaries, and checkpoints must be initialized inside the scope, as well as any functions that may lazily create variables. Any variable that is created outside scope will not be distributed and may have performance implications.

When a TF Keras model is created inside a strategy scope, the model object captures the scope information. When the compile and fit methods are called, the captured scope will be automatically entered and the strategy will replicate the model variables (same weights on each of the TPU cores) and will keep the replica weights in sync using all-reduce algorithms.

Although its ok to put everything under the scope strategy, the following operations can be called outside the scope, e.g.: input dataset creation and model save, predict and evaluate.

CONCLUSION

As seen in this article, there is a straight relation between ML workloads and hardware. The choice between using CPUs, GPUs, TPUs or other devices, can greatly impact model performance regarding training and inference. Thus, its paramount to understand the purpose of these devices and how they work.

In a world where more and more software its powered by neural network models, TPUs can bring bigger breakthroughs by significantly reduce inference and training time, especially for large models that, without TPUs, usually take weeks or months to train.